Figma MCP & Agentic Workflows

How Figma's MCP server and agentic workflows are changing the development landscape in 2025.

After neural networks and LLMs, agentic workflows are the third major leap in functional AI in the 21st century, and they look set to disrupt the development landscape. Agentic workflows involve agents that live inside different applications and can communicate with one another. Each agent has access to context within its own application, and by sharing that context, agents can enable highly sophisticated, centralized workflows. Imagine your tools not just following instructions but actually collaborating and sharing context to help you build faster.

At the core of agentic workflows are Model Context Protocol (MCP) servers, which connect agents across different platforms. In 2025, organizations are rapidly adopting MCP servers to empower users with advanced development capabilities. Today we'll explore how Figma’s MCP server can help developers, personally and professionally, turn ideas into real, working platforms without manual coding.

Figma is a leading design tool, widely used for creating dynamic interfaces. Previously, designers passed their designs to developers who used them as blueprints, but direct conversion to deployment-ready code was unavailable. Now, with the Dev Mode MCP server (currently in open beta), you can enable a local server in the Figma desktop app. This means that supported IDEs (VS Code, Cursor, Windsurf, Claude Code) can communicate with Figma and retrieve context information.

This is a game changer: you can now design your own website in Figma and have your IDE replicate the design in code through LLM agents like Claude or GPT, using the retrieved context information. Figma offers several functions through its MCP connection that enable the sharing of relevant information. These functions are as follows:

- get_code: Generates code for your Figma selection (default: React + Tailwind, but you can prompt for other frameworks).

- get_variable_defs: Returns variables and styles (colors, spacing, typography) used in your selection.

- get_code_connect_map: Maps Figma node IDs to code components in your codebase for better reuse.

- get_image: Takes a screenshot of your selection (can use real images or placeholders, toggle in settings).

- create_design_system_rules: Creates rule files to guide agents for high-quality, system-aligned code generation.
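
Under the hood, MCP clients invoke tools like these through JSON-RPC 2.0 `tools/call` requests. The sketch below builds such a request for `get_code`; in practice your IDE's agent constructs it for you. The helper name `build_tool_call` is a hypothetical name used for illustration, and the `arguments` are left empty because the exact parameters each Figma tool accepts are not covered here.

```python
import itertools
import json

# Monotonic request ids; JSON-RPC uses the id to match responses to requests.
_request_ids = itertools.count(1)


def build_tool_call(tool_name, arguments=None):
    """Return an MCP `tools/call` request as a JSON-RPC 2.0 dict.

    `build_tool_call` is a hypothetical helper for illustration only.
    """
    return {
        "jsonrpc": "2.0",
        "id": next(_request_ids),
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments or {}},
    }


if __name__ == "__main__":
    # No argument names are assumed for Figma's tools; an empty dict is sent.
    request = build_tool_call("get_code")
    print(json.dumps(request, indent=2))
```

Seeing the request shape makes it clearer why the tool names above matter: the agent picks one by name and the server returns the matching context.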

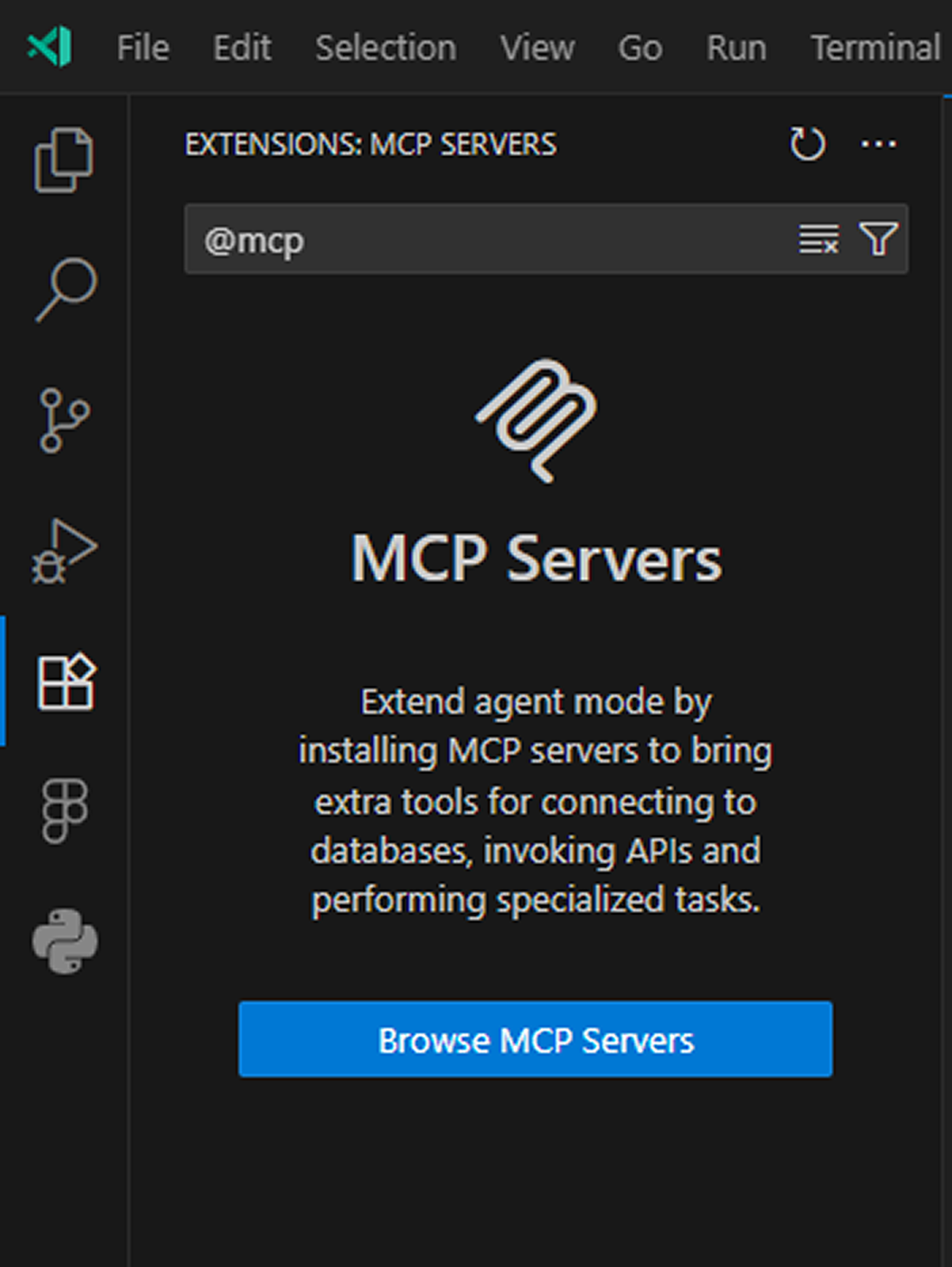

Before starting, we must first configure the MCP server in Figma and connect it to our IDE. To do this, we will open the Figma desktop app and go to Preferences > Enable local MCP Server. Then, in our IDE (VS Code Insiders in our example), we will go to Extensions and type "@mcp" to get the option to browse MCP servers.

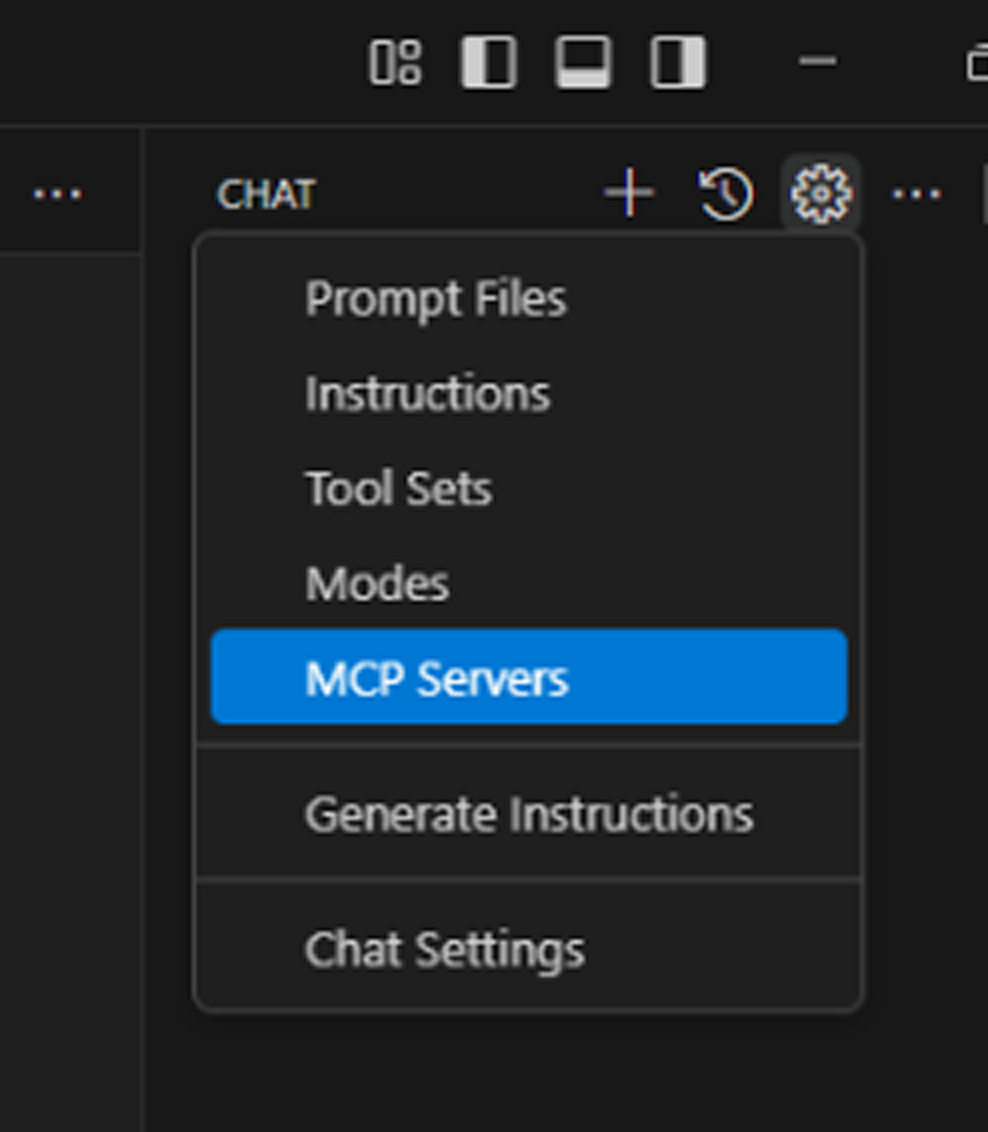

Clicking on "Browse MCP Servers" will open the browser and allow us to install the Figma MCP extension. Once it is installed, we will go back to our IDE and inspect the extension. To do this, we will go to our Copilot agent settings and select MCP Servers.

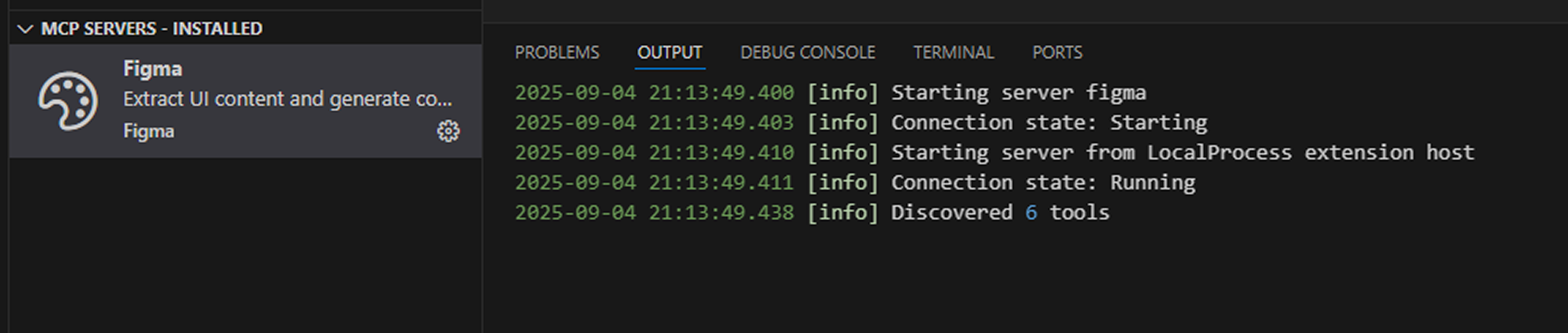

This will open the MCP server settings where we can see the newly installed Figma MCP extension. By clicking on the gear icon then Show Outputs, we can see the output logs from the MCP server. If everything is set up correctly, we should see logs indicating that the server is running and ready to accept connections.
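
Besides reading the output logs, a quick way to confirm that the local server is listening is to attempt a TCP connection. This is a minimal sketch: the port `3845` is an assumption based on the beta's commonly documented local address and may differ in your version, so check Figma's documentation for the current value. `is_port_open` is a hypothetical helper name.

```python
import socket


def is_port_open(host, port, timeout=1.0):
    """Return True if a TCP connection to host:port succeeds within `timeout` seconds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and unreachable hosts.
        return False


if __name__ == "__main__":
    # Assumed default port for the local Figma MCP server; adjust if yours differs.
    FIGMA_MCP_PORT = 3845
    if is_port_open("127.0.0.1", FIGMA_MCP_PORT):
        print("Something is listening on the MCP port.")
    else:
        print("Nothing is listening; check that the server is enabled in Figma.")
```

An open port only shows that some process is listening there; the IDE's output logs remain the better signal that the MCP handshake itself succeeded.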

To use this workflow:

- Connect your IDE to the MCP server and ensure GitHub Copilot is enabled.

- Create a clean, functional design in Figma using Auto Layout, standardized gaps, semantic layer names, and consistent layouts. Use components and variables for best results.

- Select a frame or layer in Figma, then prompt your AI agent (e.g., “Generate my Figma selection in HTML + CSS”). Ensure that the LLM Mode is set to "Agent".

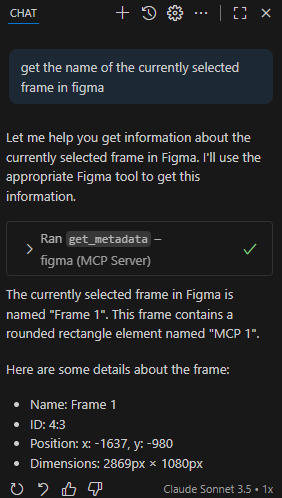

To demonstrate this workflow, I created a simple frame in Figma and asked my AI Agent to get the name of the currently selected frame. As you can see below, the agent was able to successfully retrieve the name of the selected frame.

A few details matter for this workflow to function as well as possible:

- As hinted previously, the design frames in Figma must be as clean as possible. This helps LLM agents understand the information more effectively. A messy frame is the equivalent of running a model on noisy data.

- MCP connections often struggle with very large amounts of context, so it is more effective to avoid extremely large frames. Images can also be replaced with empty placeholder frames so that the image itself does not need to be transmitted. The placeholder can later be replaced from within the IDE.

- Some IDEs like VS Code do not ship the most cutting-edge tools until they are production ready and stable. As such, it is recommended to use pre-release versions like VS Code Insiders, which come with early features relevant to our workflow.
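
The placeholder tip from the second point can be illustrated in code. The sketch below walks a simplified design tree and swaps image nodes for lightweight placeholders that keep only the name and size. The dict layout (`type`, `name`, `children`, `data`) is a hypothetical structure invented for this example and is not Figma's actual data format; in practice you would make this change by hand in the Figma file.

```python
def strip_images(node):
    """Return a copy of `node` with image nodes replaced by empty placeholders.

    Assumes a hypothetical tree of dicts with "type", "name", and optional
    "children" keys; this is not Figma's real schema.
    """
    if node.get("type") == "IMAGE":
        # Keep name and size so the layout survives; drop the heavy payload.
        return {
            "type": "PLACEHOLDER",
            "name": node.get("name", ""),
            "width": node.get("width"),
            "height": node.get("height"),
        }
    result = {key: value for key, value in node.items() if key != "children"}
    if "children" in node:
        result["children"] = [strip_images(child) for child in node["children"]]
    return result


if __name__ == "__main__":
    frame = {
        "type": "FRAME",
        "name": "Hero",
        "children": [
            {"type": "IMAGE", "name": "banner", "width": 800, "height": 400,
             "data": "x" * 1000},
            {"type": "TEXT", "name": "title"},
        ],
    }
    slim = strip_images(frame)
    print(len(str(frame)), "characters before,", len(str(slim)), "after")
```

The point is only that the structural information an agent needs (names, sizes, hierarchy) is small, while embedded image data is what inflates the context.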

The MCP server is in open beta and may change. Critically, one should remember the limitations and probabilistic nature of LLMs. It is unlikely that complicated designs will be perfectly replicated in the first pass. Once the first iteration is received, the user should inspect the result and provide feedback to refine the codebase until it is up to standard.

In summary, Figma’s MCP server and agentic workflows are making it easier than ever to turn your creative ideas into real, working code. Whether you’re a hobbyist, student, or pro, these tools make building, experimenting, and sharing faster and more accessible.